Tag: Featured

-

Over the past decade, data teams have wrestled with a classic dilemma: how to combine the flexibility of a data lake with the reliability of a data warehouse. The answer that’s reshaping analytics, machine learning, and real‑time decision‑making is the Lakehouse. This hybrid architecture unifies storage, governance, and compute in a single platform, enabling organizations to treat all…

-

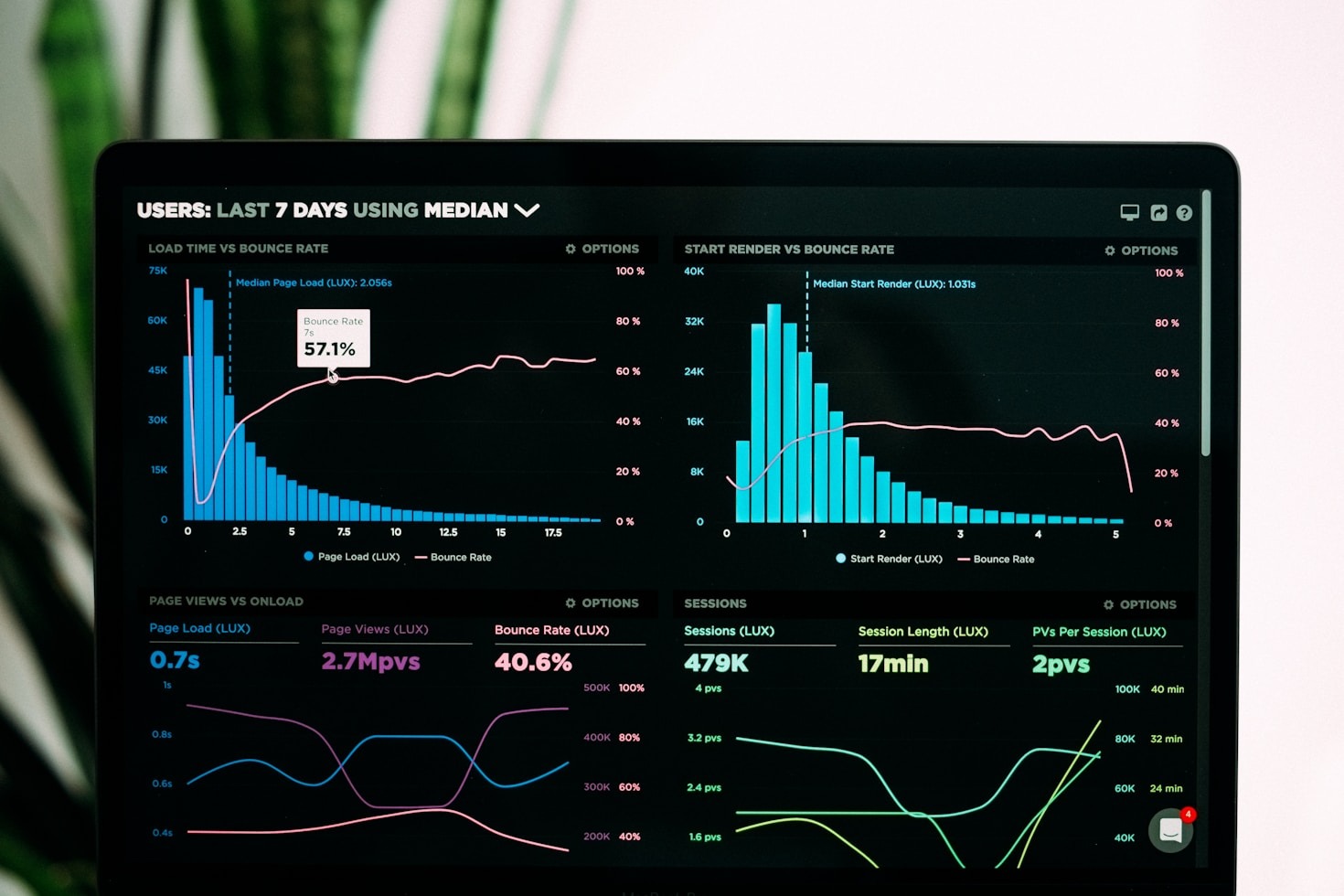

TL;DR – In 2025, data observability is no longer a nice‑to‑have; it’s a prerequisite for any data‑driven organization. By combining real‑time telemetry, AI‑driven anomaly detection, and a unified metadata layer, you can turn raw data pipelines into self‑healing, auditable systems that scale with your business.

1. Why Observability Matters

| Problem | Impact | Observability Solution |
| --- | --- | --- |
| Data quality drifts… |

-

The ability to capture, process, and act on data as it arrives is no longer a luxury; it is a necessity. Whether you are building a recommendation engine that must respond to a user’s click in real time, monitoring sensor data from a fleet of vehicles, or feeding a fraud‑detection system with every…

-

In a world where data is the new oil, the pipelines that move, clean, and enrich that oil are the engines of modern business. A robust data pipeline is more than a collection of scripts; it is a resilient, observable, and maintainable system that can grow with your organization’s needs. Below are five foundational principles…