In a world where data is the new oil, the pipelines that move, clean, and enrich that oil are the engines of modern business. A robust data pipeline is more than a collection of scripts; it is a resilient, observable, and maintainable system that can grow with your organization’s needs. Below are five foundational principles that every data engineer should embed into their pipeline design to ensure reliability, scalability, and agility.

1. Treat Data as a First‑Class Asset

Why it matters

When data is treated as a product, it comes with clear ownership, quality expectations, and lifecycle policies. This mindset shifts the focus from “just moving data” to “delivering value through data.”

How to apply it

- Data Cataloging – Maintain a living catalog that records schema, lineage, and usage statistics.

- Stewardship – Assign domain experts to own specific datasets, ensuring accountability for quality and compliance.

- Versioning – Store data in a format that supports immutable snapshots (e.g., Delta Lake, Iceberg) so you can roll back or audit changes.
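
As a rough illustration of how ownership, lineage, and immutable snapshots fit together, here is a minimal in-memory sketch. The names `CatalogEntry`, `Snapshot`, `commit`, and `at_version` are hypothetical and are not the API of Delta Lake, Iceberg, or any catalog product; a real deployment would rely on the table format's own snapshot mechanism.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Snapshot:
    """An immutable record of a dataset's schema at one version."""
    version: int
    schema: tuple          # (column_name, type_name) pairs
    created_at: datetime


@dataclass
class CatalogEntry:
    """Hypothetical catalog record: owner, lineage, and snapshot history."""
    name: str
    owner: str                                      # accountable steward
    upstream: list = field(default_factory=list)    # lineage: source datasets
    snapshots: list = field(default_factory=list)

    def commit(self, schema):
        """Append a new snapshot; earlier snapshots are never modified."""
        snap = Snapshot(
            version=len(self.snapshots) + 1,
            schema=tuple(schema),
            created_at=datetime.now(timezone.utc),
        )
        self.snapshots.append(snap)
        return snap

    def at_version(self, version):
        """Return a past snapshot for audit or rollback."""
        if not 1 <= version <= len(self.snapshots):
            raise KeyError(f"{self.name} has no version {version}")
        return self.snapshots[version - 1]
```

Because each snapshot is frozen and only ever appended, auditing a change amounts to comparing two versions, and rolling back amounts to reading an earlier one.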

2. Embrace Declarative, Idempotent Transformations

Why it matters

Declarative transformations (SQL, Spark DataFrames, Beam pipelines) are easier to reason about, test, and optimize than imperative code. Idempotency guarantees that re‑running a step on the same input leaves the output in the same state, with no duplicates and no drift, which is essential for fault tolerance and replayability.

How to apply it

- Idempotent writes – When writing to a sink, use upserts or merge operations that can safely be retried.

- Avoid side‑effects – Do not write to external systems inside a transformation unless it is part of the pipeline’s output.

- Use built‑in functions – Prefer framework‑provided operators over custom UDFs; they are usually better optimized.
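
To make the idea of an idempotent write concrete, the sketch below uses Python's standard `sqlite3` module as a stand-in sink. The `orders` table, its columns, and the `upsert_orders` helper are assumptions made for illustration, and the `ON CONFLICT ... DO UPDATE` clause assumes SQLite 3.24 or newer. Warehouses and lakehouse formats usually offer an equivalent `MERGE` statement.

```python
import sqlite3


def upsert_orders(conn, rows):
    """Write rows keyed by order_id; replaying the same batch changes nothing."""
    conn.executemany(
        """
        INSERT INTO orders (order_id, status, amount)
        VALUES (?, ?, ?)
        ON CONFLICT(order_id) DO UPDATE SET
            status = excluded.status,
            amount = excluded.amount
        """,
        rows,
    )
    conn.commit()


# Hypothetical sink: an in-memory table with a primary key to merge on.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, status TEXT, amount REAL)"
)

batch = [(1, "paid", 20.0), (2, "pending", 5.5)]
upsert_orders(conn, batch)
upsert_orders(conn, batch)  # simulated retry after a failure: no duplicates
```

The key design choice is the natural key (`order_id` here): a plain `INSERT` would duplicate rows on retry, whereas a keyed upsert converges to the same table state however many times the batch is replayed.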

3. Design for Failure, Not Just Success

Why it matters

In production, failures are inevitable—network glitches, schema drift, or downstream outages. A pipeline that gracefully recovers from these events reduces downtime and data loss.

How to apply it

- Checkpointing & State Management – Persist the state of streaming jobs so they can resume from the last successful point.

- Back‑pressure Handling – Configure consumers to slow down producers when the downstream is saturated.

- Retry & Dead‑Letter Queues – Automatically retry transient errors and route irrecoverable messages to a separate queue for manual inspection.
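
The retry and dead-letter pattern from the list above can be sketched in a few lines. This is a simplified, single-process illustration: `TransientError`, `process_with_retry`, and the in-memory `dead_letters` list are hypothetical names, and a production system would normally use the broker's own dead-letter queue instead of a Python list.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def process_with_retry(records, handler, max_attempts=3, base_delay=0.1,
                       sleep=time.sleep):
    """Apply handler to each record, retrying transient failures with
    exponential backoff and collecting unrecoverable records separately."""
    processed, dead_letters = [], []
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(record))
                break
            except TransientError as exc:
                if attempt == max_attempts:
                    # Retries exhausted: park the record for inspection.
                    dead_letters.append((record, repr(exc)))
                else:
                    # Back off 0.1s, 0.2s, 0.4s, ... before the next attempt.
                    sleep(base_delay * 2 ** (attempt - 1))
            except Exception as exc:
                # Non-transient errors (e.g. malformed data) are not retried.
                dead_letters.append((record, repr(exc)))
                break
    return processed, dead_letters
```

Separating transient from permanent errors keeps a single bad record from blocking the rest of the batch, while the dead-letter list preserves both the record and the reason it failed.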

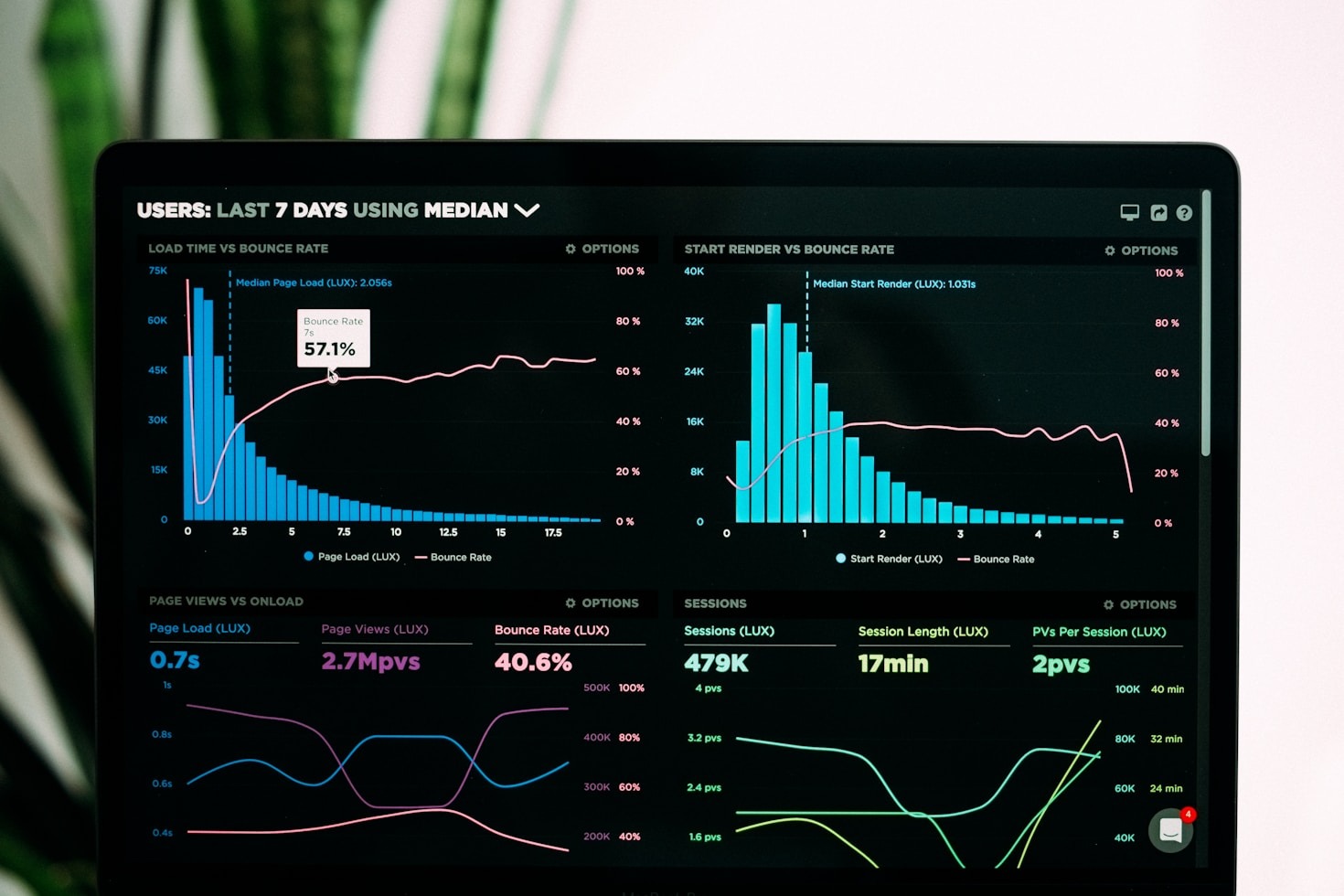

4. Prioritize Observability and Monitoring

Why it matters

Visibility into the pipeline’s health is the difference between a silent failure and a rapid response. Observability lets you detect anomalies, understand root causes, and prove compliance.

How to apply it

- Metrics – Track ingestion rates, processing latency, error counts, and resource utilization.

- Tracing – Use distributed tracing to follow a single record through the entire pipeline.

- Logging – Emit structured logs that can be correlated with metrics and traces.

- Alerting – Set thresholds for critical metrics and integrate with incident management tools.
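
As one possible way to combine structured logs with basic metrics, the sketch below uses only the Python standard library. The `run_id` and `stage` fields, the `run_stage` helper, and the module-level `metrics` counter are illustrative assumptions; in practice the counter would be replaced by whatever metrics client your platform provides.

```python
import json
import logging
import time
from collections import Counter

# Stand-in for a real metrics client (assumption for this sketch).
metrics = Counter()


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object so it can be queried and
    joined with metrics and traces on shared fields such as run_id."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "message": record.getMessage(),
            "run_id": getattr(record, "run_id", None),
            "stage": getattr(record, "stage", None),
        }
        if record.exc_info:
            payload["error"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(name="pipeline"):
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid attaching duplicate handlers
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


def run_stage(logger, run_id, stage, func, records):
    """Run func over records, counting successes/failures and timing the stage."""
    context = {"run_id": run_id, "stage": stage}
    start = time.perf_counter()
    results = []
    for record in records:
        try:
            results.append(func(record))
            metrics[f"{stage}.records_ok"] += 1
        except Exception:
            metrics[f"{stage}.records_failed"] += 1
            logger.exception("record failed", extra=context)
    elapsed = time.perf_counter() - start
    logger.info("stage finished in %.3fs", elapsed, extra=context)
    return results
```

An alert rule could then watch a ratio such as `records_failed / (records_ok + records_failed)` per stage, and the shared `run_id` lets an on-call engineer jump from the alert to the matching log lines.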

5. Automate Everything That Can Be Automated

Why it matters

Manual interventions are error‑prone and slow. Automation accelerates delivery, enforces consistency, and frees engineers to focus on value‑added work.

How to apply it

- Infrastructure as Code – Use Terraform, Pulumi, or CloudFormation to provision clusters, storage, and networking.

- CI/CD Pipelines – Test, lint, and deploy pipeline code automatically.

- Data Quality Gates – Run automated tests (e.g., Great Expectations, Deequ) before data lands in production.

- Self‑Healing – Trigger remediation workflows (e.g., restart a failed job, roll back a bad schema change) based on observability signals.
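
A data quality gate can start out very simple. The sketch below is a hand-rolled illustration in plain Python and deliberately does not use the Great Expectations or Deequ APIs; `check_batch`, `publish`, `QualityGateError`, and the list-based `sink` are hypothetical names chosen for this example.

```python
class QualityGateError(Exception):
    """Raised when a batch fails validation and must not be published."""


def check_batch(rows, required, max_null_ratio=0.0):
    """Return a list of human-readable failures; an empty list means pass."""
    if not rows:
        return ["batch is empty"]
    failures = []
    for column in required:
        nulls = sum(1 for row in rows if row.get(column) is None)
        ratio = nulls / len(rows)
        if ratio > max_null_ratio:
            failures.append(
                f"{column}: {ratio:.0%} null exceeds limit of {max_null_ratio:.0%}"
            )
    return failures


def publish(rows, required, sink):
    """Validate first, then write; a failing batch never reaches the sink."""
    failures = check_batch(rows, required)
    if failures:
        raise QualityGateError("; ".join(failures))
    sink.extend(rows)
```

Run as a CI/CD or orchestration step, a raised `QualityGateError` fails the job before any data lands, which is the behavior the gate is meant to enforce.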

Bringing It All Together

A robust data pipeline is a living system that balances performance with reliability, scalability with maintainability, and innovation with compliance. By treating data as a product, writing declarative and idempotent transformations, designing for failure, investing in observability, and automating wherever possible, you create a foundation that can evolve with your organization’s growth and the ever‑changing data landscape.

Start small—pick one principle, embed it into your next pipeline, and iterate. Over time, the cumulative effect will be a resilient, high‑velocity data infrastructure that powers smarter decisions, faster insights, and a competitive edge.
